Memory

Intro

↪ I recently started a study group seminar with my colleagues. We met to decide which subject to start with. Since a program's code must be loaded into memory before it can run as a process, we decided to begin with memory, a topic we had studied as undergraduates. In this post I will briefly summarize memory allocation, fragmentation, and disk swap.

Contiguous Allocation

↪ Every process is loaded into memory to execute, and the more resources processes use, the higher the cost. It is therefore important to place processes in memory as efficiently as possible.

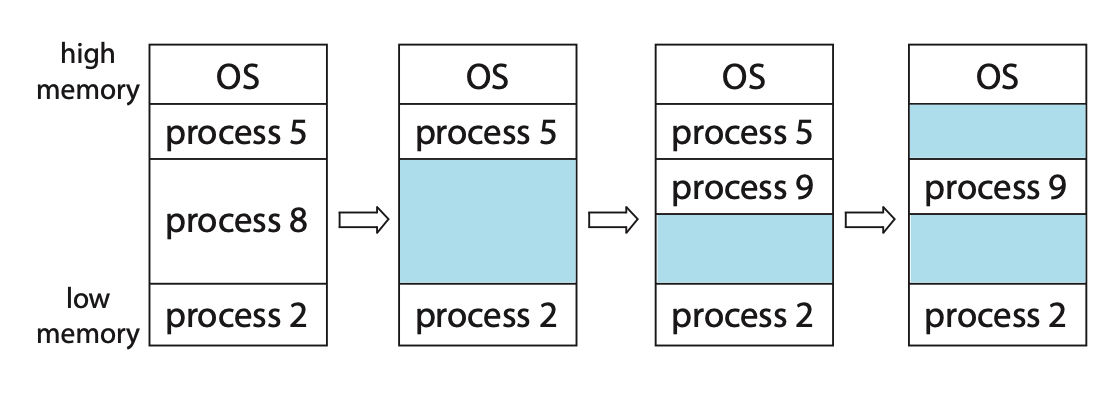

The first approach that comes to mind is to load each process, as a single contiguous block, into whatever empty space is available in memory. However, this method has a problem.

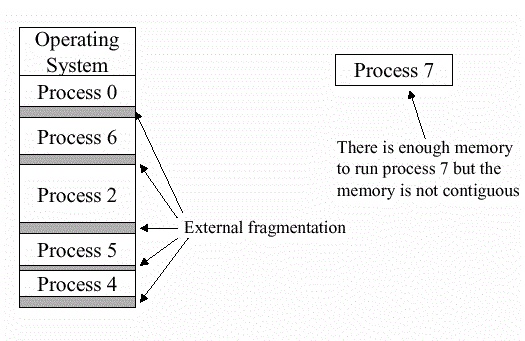

External Fragmentation

↪ As shown in the figure, the total free memory is sufficient, but no single contiguous free region is large enough to hold ‘Process 7’. This is called external fragmentation.
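To make the situation concrete, here is a minimal Python sketch. The memory size, the block layout, and the helper names `free_holes` and `can_place_contiguously` are all made up for illustration and do not come from the figure. It shows a case where the free space adds up to more than the request, yet no single hole can hold it.

```python
def free_holes(memory_size, allocations):
    """Return (start, size) for each free gap between allocated blocks.

    allocations: list of (start, size) tuples, assumed not to overlap.
    """
    holes = []
    cursor = 0
    for start, size in sorted(allocations):
        if start > cursor:
            holes.append((cursor, start - cursor))
        cursor = start + size
    if cursor < memory_size:
        holes.append((cursor, memory_size - cursor))
    return holes


def can_place_contiguously(holes, request):
    """A contiguous allocation needs one single hole that is big enough."""
    return any(size >= request for _, size in holes)


# Hypothetical layout: 100 units of memory, three resident processes.
allocations = [(10, 20), (45, 25), (80, 10)]
holes = free_holes(100, allocations)
total_free = sum(size for _, size in holes)
request = 30  # size of the incoming process (illustrative)

print(holes)                                    # the individual free gaps
print(total_free >= request)                    # enough free memory in total?
print(can_place_contiguously(holes, request))   # but does any one gap fit?
```

In this layout the free space totals 45 units, but the largest single hole is only 15 units, so a 30-unit process cannot be placed.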

So why not shift the loaded processes to one side and merge the remaining free space, just as we tidy books on a bookshelf?

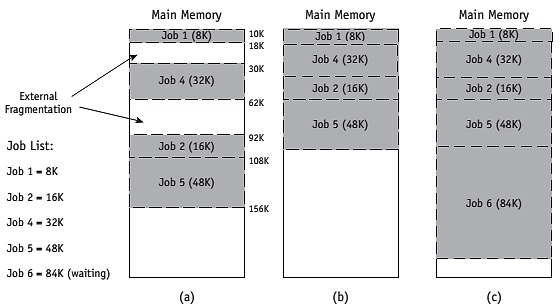

Compaction

↪ In terms of space, this method looks flawless: eliminating every fragmented gap lets memory be used as efficiently as possible.

However, while compaction maximizes space efficiency, it is costly in time. The more processes that have to be relocated in memory, the greater the overhead, so overall it is not very efficient.

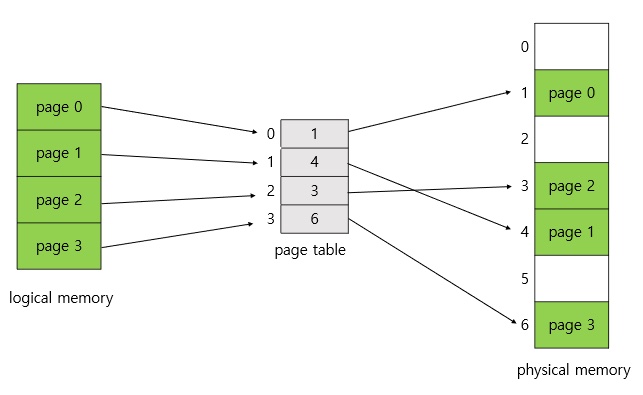
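The trade-off can be sketched in a few lines of Python. This is only an illustration under simple assumptions: blocks are slid toward address 0 in their current order, and the cost is counted as the number of memory units copied. The layout and the `compact` helper are hypothetical.

```python
def compact(allocations):
    """Slide every block toward address 0, preserving order.

    allocations: list of (name, start, size) tuples.
    Returns the new layout and how many memory units had to be copied.
    """
    new_layout = []
    moved_units = 0
    cursor = 0
    for name, start, size in sorted(allocations, key=lambda a: a[1]):
        if start != cursor:
            moved_units += size  # the whole block must be copied
        new_layout.append((name, cursor, size))
        cursor += size
    return new_layout, moved_units


# Hypothetical layout in a 100-unit memory.
layout = [("P1", 10, 20), ("P2", 45, 25), ("P3", 80, 10)]
compacted, moved = compact(layout)

print(compacted)  # blocks packed back to back from address 0
print(moved)      # units copied to achieve that
```

Here every block has to move, so all 55 allocated units are copied just to merge the free space into one region. The cost grows with the amount of resident memory, which is why compaction is expensive.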

Paging

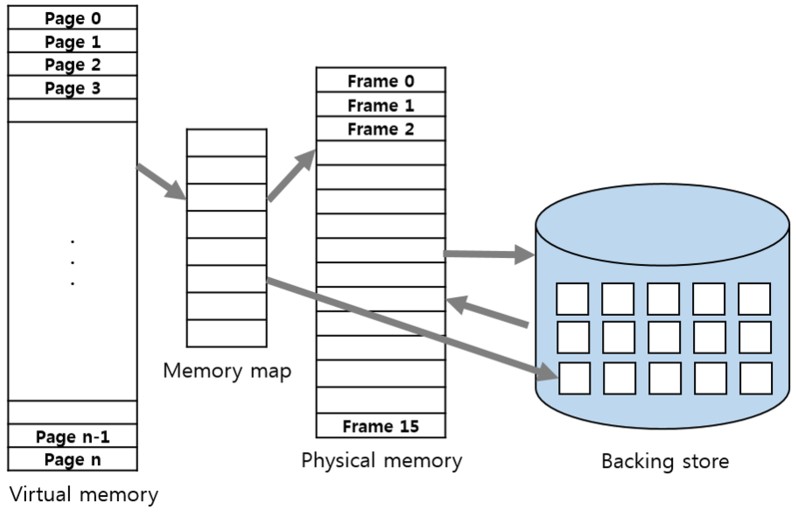

↪ In the end, standardizing the unit of allocation seems to be the best way to achieve efficiency. Paging divides physical memory into fixed-size blocks called frames, and divides each process into blocks of the same size called pages. With this scheme, a page's position within the process (its logical address) naturally differs from where it actually sits in memory (its physical address). The page table maps one to the other.

When a process is loaded into memory as these separate pages, any free frame can hold any page, so external fragmentation no longer occurs.
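The mapping done by the page table can be sketched as follows. This is a simplified model, not how any particular operating system implements it: the 4 KB page size, the table contents, and the `translate` function are assumptions chosen for the example.

```python
PAGE_SIZE = 4096  # assumed 4 KB pages and frames

# Hypothetical page table: index = page number, value = frame number.
page_table = [5, 2, 7]


def translate(logical_address, page_table, page_size=PAGE_SIZE):
    """Translate a logical address into a physical address."""
    # Split the logical address into a page number and an offset in that page.
    page_number, offset = divmod(logical_address, page_size)
    if page_number >= len(page_table):
        raise IndexError("address is outside the process's pages")
    # Look up the frame and keep the same offset inside it.
    frame_number = page_table[page_number]
    return frame_number * page_size + offset


print(translate(0, page_table))     # first byte of page 0, stored in frame 5
print(translate(4100, page_table))  # byte 4 of page 1, stored in frame 2
```

Note that consecutive pages (0, 1, 2) land in non-adjacent frames (5, 2, 7), which is exactly why the process no longer needs one contiguous region.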

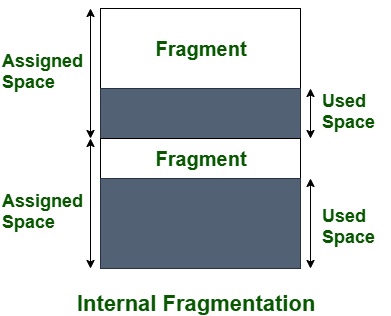

Internal Fragmentation

↪ However, a process's size is not always an exact multiple of the frame size, so its last page may not fill its frame. The unused space inside that frame is called internal fragmentation. For example, with 4KB frames, if the last page of a process needs only 3KB, 1KB is wasted. Of course, the smaller the frame size, the less space is lost to internal fragmentation, but the more pages there are to map, so the page-mapping overhead grows.
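The arithmetic behind this trade-off is easy to check. The sketch below assumes an 11KB process, chosen so that its last page holds 3KB when frames are 4KB. The `paging_cost` helper is hypothetical.

```python
KB = 1024


def paging_cost(process_size, frame_size):
    """Return (pages_needed, wasted_bytes) for one process."""
    pages = -(-process_size // frame_size)       # ceiling division
    wasted = pages * frame_size - process_size   # unused tail of the last frame
    return pages, wasted


process_size = 11 * KB  # illustrative size

for frame_size in (4 * KB, 2 * KB, 1 * KB):
    pages, wasted = paging_cost(process_size, frame_size)
    print(f"frame={frame_size // KB}KB pages={pages} wasted={wasted}B")
```

With 4KB frames the process needs 3 pages and wastes 1KB. With 1KB frames nothing is wasted, but the page table needs 11 entries instead of 3.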

Disk Swap

↪ When a process is split into pages and loaded into memory, every location has both a logical address and a physical address. Keeping this separation in mind makes it easier to see how the following technique helps us use memory.

In addition to main memory, we can also use auxiliary storage devices such as SSDs and HDDs. These devices are slower than memory but offer much more capacity, so they can serve as backing space for pages. When there is not enough physical memory, we assume a larger virtual memory space and assign logical addresses within it, while some pages are kept on disk. When a process references a page that is not currently in physical memory, a page fault occurs, and the page is read from disk into a physical frame. Moving pages between memory and disk in this way is called disk swap.

As noted above, disks are significantly slower than memory, so they can become a bottleneck, and frequent page faults can dramatically slow down a program. With the arrival of faster storage such as NVMe SSDs, this bottleneck is less of a concern than it used to be, which makes disk swap more practical.
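To see how the amount of physical memory affects the number of page faults, here is a small simulation. It is a sketch under assumptions that the text above does not specify: when memory is full, the page that was loaded earliest is swapped out (a simple FIFO policy picked only for illustration), and the reference sequence and the `count_page_faults` function are made up.

```python
from collections import OrderedDict


def count_page_faults(references, num_frames):
    """Count page faults for a sequence of page references.

    Assumption: when all frames are in use, the page that was loaded
    earliest is swapped out (FIFO).
    """
    resident = OrderedDict()  # pages currently in memory, in load order
    faults = 0
    for page in references:
        if page in resident:
            continue  # page is already in memory: no disk access needed
        faults += 1   # page fault: the page must be read from disk
        if len(resident) >= num_frames:
            resident.popitem(last=False)  # swap out the oldest page
        resident[page] = None
    return faults


references = [0, 1, 2, 0, 3, 0, 1]  # hypothetical page reference sequence

print(count_page_faults(references, 3))  # faults with 3 physical frames
print(count_page_faults(references, 4))  # faults with 4 physical frames
```

For this sequence, 3 frames give 6 faults while 4 frames give 4. Each fault stands for a disk read, so even a small shortage of memory can translate into a noticeable slowdown.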
